In our previous post, we looked at the technical details of our AI-guided spectrum operations approach. Today, in part two of our series, we will talk about our ethical approach to AI and how that approach affects the end user’s ability to trust the system’s behavior.

The Need for AI in Spectrum Operations

New communications technologies have made the electromagnetic spectrum an increasingly dynamic environment. The expansion of cellular data transfer capabilities, the adoption of wireless Internet of Things (IoT) sensors, and the widespread introduction of self-driving/unmanned vehicles have resulted in a monumental increase of systems and stakeholders reliant on wireless communication.

Because of this growing complexity and dynamism, maintaining an adequate level of domain situational awareness requires systems that are equally (or, in some cases, more) dynamic and intelligent. Gone are the days when people could provide adequate awareness by manually monitoring the spectrum for emitters.

For these reasons, QRC is dedicated to applying artificial intelligence (AI)/machine learning (ML) best practices and fundamental research to integrate ethically sound autonomous capabilities into solutions that characterize, control, and dominate the electromagnetic spectrum.

What Is Ethical AI?

As AI’s influence on our decision-making and our perception of the world continues to grow, the discussion and practice of AI ethics must be prioritized. Merriam-Webster defines ethics as “the discipline dealing with what is good and bad and with moral duty and obligation.”[1] When we apply ethics to artificially intelligent autonomous and decision support systems, it’s important to realize that moral duty, obligation, and accountability do not shift to the systems in question, but remain with the human beings who create and use them.

The subject of trustworthiness is an essential part of any conversation involving AI ethics. By itself, an autonomous system is a non-moral agent that can make decisions. To avoid negligence that comes as a result of leaving decision-making tasks to a non-moral agent, we must see these technologies as extensions of the reasoning and will of the user. In short, we must trust that the decisions made are in line with the values of the individuals and organizations employing them.

For this to occur, we must trust the soundness of five aspects of an AI system:

- The learning methods, which transform training data into models used for further decision making

- The data, which serves as the basis for decisions that are made during system runtime

- The perception of the system, which must accurately assess the current state on which decisions are based

- The execution of the system, in which the system acts on decisions it has reached in a responsible manner

- The security of the system, in cyber terms and in model, data, and algorithmic terms

QRC is committed to developing systems that meet or exceed the current understanding of AI/ML ethics. Our understanding of what constitutes an ethical system is three-fold:

- Transparent – We can trace how a model learns from data and can fully account for the reasoning behind the decisions made by a system. The training data is also transparent and readily inspectable.

- Understandable – We understand why the model made the decision that it did, based on the data provided. We also understand the data itself and the circumstances in which it was gathered, curated, and labeled.

- Auditable – The system produces logs detailed enough to assess whether it perceived and executed correctly.
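To make the auditable property more concrete, here is a minimal sketch of what one decision log entry could look like. It is an illustration, not QRC's logging format: the function names (`audit_record`, `verify`), the field names, and the example values are all assumptions. Each entry stores what the system perceived, what it did, and a checksum of the entry's contents.

```python
import hashlib
import json
from datetime import datetime, timezone

def audit_record(features, decision, model_version, reason):
    """Build one JSON-serializable audit entry for a single decision."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "perception": features,  # what the system saw
        "execution": decision,   # what the system did
        "reason": reason,        # human-readable basis for the decision
    }
    # Checksum makes accidental corruption or casual edits detectable.
    # It is not a substitute for signed or append-only logs.
    body = json.dumps(entry, sort_keys=True)
    entry["sha256"] = hashlib.sha256(body.encode("utf-8")).hexdigest()
    return entry

def verify(entry):
    """Recompute the checksum over every field except the checksum itself."""
    content = {k: v for k, v in entry.items() if k != "sha256"}
    body = json.dumps(content, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest() == entry["sha256"]

# Hypothetical example values
record = audit_record(
    features={"center_freq_mhz": 2437.0, "bandwidth_mhz": 20.0},
    decision="classified:class_3",
    model_version="example-0",
    reason="nearest known cluster within distance threshold",
)
print(json.dumps(record, indent=2))
```

Writing one such entry per decision, for example as one JSON object per line, lets a reviewer replay what the system saw against what it did.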

Unsurprisingly, QRC’s approach closely follows the AI Ethical Guidelines being promoted by the Department of Defense. The five tenets for DoD Data Science follow:

- Responsible – Human beings should exercise appropriate levels of judgment and remain responsible for the development, deployment, and outcomes of DoD AI systems.

- Equitable – The DoD should take deliberate steps to avoid unintended bias in the development and deployment of combat or noncombat AI systems that would inadvertently cause harm to persons.

- Traceable – The DoD’s AI engineering discipline should be sufficiently advanced such that technical experts possess an appropriate understanding of the technology, development processes, and operational methods of its AI systems, including transparent and auditable methodologies, data sources, and design procedure and documentation.

- Reliable – DoD AI systems should have an explicit, well-defined domain of use, and the safety, security, and robustness of such systems should be tested and assured across their entire life cycle within the domain of use.

- Governable – DoD AI systems should be designed and engineered to fulfill their intended function while possessing the ability to detect and avoid unintended harm or disruption, and for human or automated disengagement or deactivation of deployed systems that demonstrate unintended escalatory or other behavior.

The Pitfalls Of Opaque Box Models

Models possessing barriers to transparency and understandability are generally referred to as “opaque box” models, as opposed to “transparent box” models, which are inherently self-descriptive and lend themselves to be understood via inspection.

With the abundance of supporting technology available, deep learning methods (AI/ML methods based on multilayer artificial neural networks, which seek to mimic the operation of a biological brain, to an extent) are a popular choice of opaque box model. While deep learning methods certainly have their place in applications such as computer vision and hearing systems, they are prone to overuse because they are widely available and easy to implement.

Understanding how or why a neural network arrives at an answer is not impossible, but reverse engineering the model could take an advanced engineer or mathematician a great deal of time (months or years in some cases). This is especially troublesome when the model does not perform as expected. A deep neural network’s ability to model “hidden relationships” within the data is both a strength and a weakness: those relationships may be so unconventional that a typical data scientist would be unable to find the anomalies without taking extraordinary measures.

The inability to view the mechanisms by which learning algorithms work, paired with the lack of fundamental understanding of training data, can lead to the development of ethically problematic systems.

For example, let’s say a bank builds an automated loan approval system that employs a neural network-based model to determine an individual’s creditworthiness. The bank trains the system on historical data: a spreadsheet of customer data points, each labeled with whether the individual was approved or rejected. In a good-faith effort to be fair and nondiscriminatory, let’s say the bank even removes ethnicity, first name, and last name from the data. Has the bank created a system free of past discriminatory lending practices?

Not likely. And even if they did, they couldn’t explain why.

It’s likely that during the learning process this system would simply find other clues in the remaining data that reduce the “error” between the input data (demographic and financial information) and the label (approval or rejection), thereby reproducing past discriminatory practices through other means.
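The effect can be reproduced with a toy experiment. The sketch below is a minimal illustration under stated assumptions, not a model of any real lender. The data is synthetic and every number is invented. The `zone` column is a hypothetical proxy that matches the protected group 90% of the time. A simple per-zone income threshold stands in for the neural network, so the learned rule can be read directly. The model never sees the `group` column, yet fitting it to the biased historical labels is enough to carry the bias forward.

```python
import random

def make_history(n=4000, seed=0):
    """Synthetic loan history with biased labels. All numbers are invented."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        group = rng.choice("AB")            # protected attribute
        likely_zone = 1 if group == "A" else 0
        # 'zone' is a proxy: it matches the group 90% of the time
        zone = likely_zone if rng.random() < 0.9 else 1 - likely_zone
        income = rng.gauss(50, 10)          # same distribution for both groups
        bar = 45 if group == "A" else 60    # the historical bias
        rows.append({"group": group, "zone": zone,
                     "income": income, "approved": income > bar})
    return rows

def fit_thresholds(rows):
    """Learn one income threshold per zone by minimizing error against
    past labels. The protected 'group' column is never read here."""
    thresholds = {}
    for zone in (0, 1):
        subset = [r for r in rows if r["zone"] == zone]

        def errors(t):
            return sum((r["income"] > t) != r["approved"] for r in subset)

        thresholds[zone] = min(range(30, 81), key=errors)
    return thresholds

def approval_rate(rows, thresholds, group):
    """Share of a group's applicants the learned rule would approve."""
    members = [r for r in rows if r["group"] == group]
    approved = sum(r["income"] > thresholds[r["zone"]] for r in members)
    return approved / len(members)

history = make_history()
model = fit_thresholds(history)
rate_a = approval_rate(history, model, "A")
rate_b = approval_rate(history, model, "B")
print(f"learned income threshold by zone: {model}")
print(f"approval rate, group A: {rate_a:.2f}; group B: {rate_b:.2f}")
```

Both groups have the same income distribution, so any gap in approval rates comes from the historical labels alone, reaching the model through the proxy. Because this toy model is just two thresholds, the problem is visible on inspection. The same pattern buried inside a deep network would be much harder to spot.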

QRC’s Transparent Box Approach To Classification

QRC employs and develops transparent box approaches to AI development, beginning with data understanding and developing well-defined and distinguishable features that produce the results needed.

Our process for developing any AI/ML-based capability starts with understanding the problem space. Next, we deepen that understanding by exploring the data with a combination of statistical and unsupervised learning methods to develop well-defined and distinguishable features. Once a sufficient and meaningful feature set is developed, we employ and develop the inspection-friendly transparent box machine learning techniques required to meet performance goals.

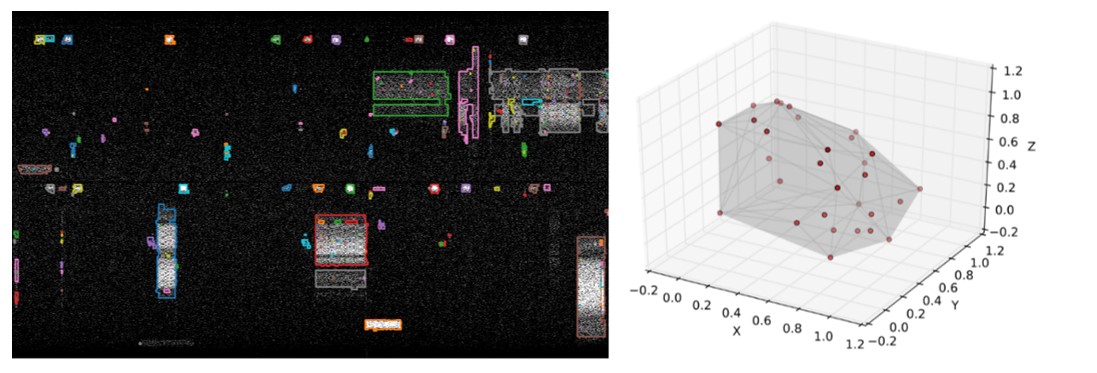
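One way to make “distinguishable” concrete during feature development is to score how well a candidate feature separates two classes. The sketch below uses a Fisher-style ratio (squared gap between class means divided by the summed class variances). This is a common textbook measure chosen for illustration, not necessarily the statistic QRC uses, and the feature names and measurements are hypothetical.

```python
from statistics import mean, pvariance

def fisher_score(class_a, class_b):
    """Squared gap between class means divided by the summed class variances.
    Higher means the feature separates the two classes more cleanly."""
    spread = pvariance(class_a) + pvariance(class_b)
    gap = (mean(class_a) - mean(class_b)) ** 2
    if spread == 0:
        return float("inf") if gap > 0 else 0.0
    return gap / spread

# Hypothetical measurements of two candidate features for two emitter types
bandwidth_mhz = {"type_1": [9.8, 10.1, 10.0, 10.3],
                 "type_2": [19.7, 20.2, 20.0, 20.4]}
burst_ms = {"type_1": [1.0, 2.5, 1.8, 3.1],
            "type_2": [1.2, 2.9, 2.0, 2.6]}

for name, feature in (("bandwidth_mhz", bandwidth_mhz), ("burst_ms", burst_ms)):
    score = fisher_score(feature["type_1"], feature["type_2"])
    print(f"{name}: {score:.3f}")
```

In this made-up data the bandwidth feature scores far higher than the burst-length feature, which is the kind of evidence that could justify keeping one feature and dropping the other.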

During the development of QRC’s counter-unmanned aircraft system (c-UAS) classification system, we followed this process, seeking to develop a transparent, understandable, auditable AI/ML-based decision support system. The result is a form of intelligent agent built on an unsupervised, clustering-based process with the following additional capabilities:

- Online learning – The ability to learn from its environment during operation

- Novel class detection – The ability to determine that certain types of unknown communications channels are in use

- Transfer learning – The ability to share the result of the system’s learning process with other systems, in whole or in part

- The ability to detect, classify, and learn from even the noisiest of environments

- The ability to detect, classify, and learn on systems with low size, weight, and power requirements
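To give a feel for how a clustering-based process can support online learning, novel class detection, and sharing of learned state, here is a minimal sketch. It is not QRC's algorithm. It is a generic nearest-centroid scheme with a distance threshold, and the class name `OnlineClusterer`, the `radius` parameter, and the two-element feature vectors are all assumptions made for illustration.

```python
import math

class OnlineClusterer:
    """Nearest-centroid clustering that updates one observation at a time."""

    def __init__(self, radius):
        self.radius = radius   # max distance to count as a known class
        self.centroids = []    # one feature vector per learned class
        self.counts = []       # observations absorbed by each class

    def observe(self, x):
        """Assign x to a class and learn from it. Returns (class_id, is_novel)."""
        best, best_dist = None, math.inf
        for i, c in enumerate(self.centroids):
            d = math.dist(x, c)
            if d < best_dist:
                best, best_dist = i, d
        if best is None or best_dist > self.radius:
            # Nothing known is close enough: start a new class
            self.centroids.append(list(x))
            self.counts.append(1)
            return len(self.centroids) - 1, True
        # Online update: move the centroid toward x by a running-mean step
        self.counts[best] += 1
        step = 1.0 / self.counts[best]
        self.centroids[best] = [c + step * (v - c)
                                for c, v in zip(self.centroids[best], x)]
        return best, False

    def export(self, class_ids=None):
        """Copy all or some learned classes so another instance could load them."""
        ids = range(len(self.centroids)) if class_ids is None else class_ids
        return {i: (list(self.centroids[i]), self.counts[i]) for i in ids}

# Hypothetical features: (center frequency in MHz, bandwidth in MHz)
agent = OnlineClusterer(radius=5.0)
samples = [(2400.0, 20.0), (2401.0, 20.5), (915.0, 0.5)]
results = [agent.observe(x) for x in samples]
print(results)  # prints [(0, True), (0, False), (1, True)]
```

The learned state is only a list of centroids and counts, so it can be inspected directly and costs little memory or compute. Using raw Euclidean distance over features with different units is a simplification. A practical system would need scaled features and a more careful notion of distance.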

The astute reader may wonder whether the online learning capability is vulnerable to bad actor inputs, and how the system handles those conditions and other contested environments. While this is an ever-evolving and challenging area to guard against, we employ several techniques to identify such inputs and can even turn them to our advantage.

In addition to drone classification, we’re applying these techniques to other problem spaces, such as modulation and protocol recognition, which serve as stepping stones to solutions that provide increasingly sophisticated capabilities.

Conclusion

When developing machine learning systems, a better understanding of your data leads to better and more trustworthy systems. The creation of ethically sound AI solutions doesn’t happen by accident, nor can it be bolted onto the tail end of a development effort.

Innovation, in the form of developing our machine learning process, was neither a coincidence nor solely the result of engineering brilliance. QRC’s success is largely attributable to a sound, data-driven approach that fuses data science best practices with a dedication to forgoing an easier path in favor of one that better serves the need for transparency and accountability in autonomous systems.