The Distribution Digital Twin – A Key Enabler Of Grid Modernization

This is the fifth in my series of articles focused on the Modernization of the Electric Grid. This article extends the discussion of the distribution system from the previous article, focusing on a critical technology used in the planning, development, operation, and maintenance of the system: the Digital Twin.

One of the most exciting, and most rapidly advancing, technologies today is the Digital Twin (DT or Twin). The concept has wide application for industrial plants (manufacturing, oil and gas wells, refineries, generation stations), transportation infrastructure, military bases, and, of course, utilities. The renewed emphasis on national infrastructure improvement and upgrades has many industries looking for new ways to plan and manage these huge investments more efficiently. In addition, asset owners seek better ways to document and maintain the information associated with these large-scale infrastructure investments.

Parsons, for example, in partnership with a DT technology company, is supporting one of the largest airports in the country in its first effort to create a modern digital twin of a recently renovated terminal, runway, and central utility plant. With the twin in place, the airport will be better able to visualize and support asset operations as it begins integrating more of its asset and information systems with the new platform.

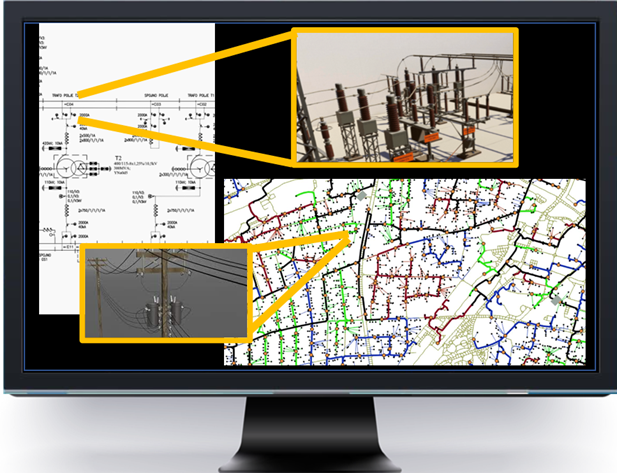

Digital twin is a relatively new term in the electric transmission and distribution (T&D) industry, building on the traditional concepts of maps, schematics, and simple computer-based models. The modern digital twin encompasses not only a “digital” version of traditional “one-line” and “three-line” schematics and maps, but can also incorporate a full three-dimensional (3D) geospatial model of the energized lines and equipment AND the structures supporting them. Electric power substations, for example, typically contain a lot of equipment, conductors, and control wiring packed into a relatively small space (though some substations are pretty huge!). Because the substation is so compact, accurate measurement of clearances between equipment, steelwork, and conductor bus pipes is critically important for reliability and worker safety. This is where a highly accurate 3D model of everything in the station can be extremely helpful for design engineers planning expansions, removals, or replacements of equipment.
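To make the clearance idea concrete, here is a minimal sketch, assuming the 3D model can be sampled as lists of (x, y, z) points in metres for each conductor and steel member. It finds the smallest straight-line distance between two sampled objects and compares it with a required clearance. The function names, coordinates, and the 2.0 m requirement are hypothetical placeholders, not values from any design standard; commercial modeling tools typically measure distances between exact geometric shapes rather than sampled points.

```python
import math
from itertools import product

# Hypothetical sampled points (x, y, z) in metres taken from a 3D station model.
Point = tuple[float, float, float]


def min_clearance(a: list[Point], b: list[Point]) -> float:
    """Smallest straight-line distance between any point in `a` and any point in `b`."""
    return min(math.dist(p, q) for p, q in product(a, b))


def check_clearance(a: list[Point], b: list[Point], required_m: float) -> tuple[float, bool]:
    """Return the measured clearance and whether it meets the required value."""
    gap = min_clearance(a, b)
    return gap, gap >= required_m


# A horizontal bus pipe 5 m above grade and a steel member 1.5 m below it.
bus_pipe = [(0.0, 0.0, 5.0), (1.0, 0.0, 5.0), (2.0, 0.0, 5.0)]
steel = [(1.0, 0.0, 3.5), (1.0, 1.0, 3.5)]
# The 2.0 m requirement is an illustrative placeholder, not a value from any code.
print(check_clearance(bus_pipe, steel, required_m=2.0))
```

Here the closest pair is directly above and below each other, so the measured clearance is 1.5 m and the check fails against the placeholder requirement.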

Another, and I would argue more important, component of the digital twin is the tremendous amount of data related to all the underlying elements of the model, the asset ontology (the relationships among the assets), AND the relationships between the elements and their data sources. Aside from the myriad detailed attributes of each model element, the digital twin can include historical (and planned) model changes, equipment maintenance and inspection records, and live sensor data streams with measurement and status information from all across the grid. Even customer information, such as how much power each customer consumes from or supplies to the grid, can be part of the digital twin.
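One way to picture the asset ontology and its links to source systems is as a small graph. The sketch below is an illustration under simple assumptions: assets are stored by ID, relationships are (subject, relation, object) triples, and each asset carries pointers to records held in other systems. The class names, relation names such as `mounted_on` and `feeds`, and the record keys are all hypothetical; real twins often use a standard model such as CIM for this.

```python
from dataclasses import dataclass, field


@dataclass
class Asset:
    asset_id: str
    asset_type: str
    attributes: dict = field(default_factory=dict)


@dataclass
class Twin:
    assets: dict = field(default_factory=dict)      # asset_id -> Asset
    relations: list = field(default_factory=list)   # (subject, relation, object) triples
    records: dict = field(default_factory=dict)     # asset_id -> [(source_system, record_key)]

    def add(self, asset: Asset) -> None:
        self.assets[asset.asset_id] = asset

    def relate(self, subject: str, relation: str, obj: str) -> None:
        self.relations.append((subject, relation, obj))

    def link(self, asset_id: str, system: str, key: str) -> None:
        self.records.setdefault(asset_id, []).append((system, key))

    def related(self, asset_id: str, relation: str) -> list:
        """Objects linked to `asset_id` by the given relation."""
        return [o for s, r, o in self.relations if s == asset_id and r == relation]


twin = Twin()
twin.add(Asset("P-44", "pole", {"height_ft": 40}))
twin.add(Asset("T1", "transformer", {"kva": 50}))
twin.add(Asset("M-9001", "meter"))
twin.relate("T1", "mounted_on", "P-44")
twin.relate("T1", "feeds", "M-9001")
twin.link("T1", "EAM", "WO-1001")          # hypothetical maintenance record
twin.link("M-9001", "Billing", "ACCT-77")  # hypothetical customer account
print(twin.related("T1", "feeds"), twin.records["T1"])
```

Keeping relationships and record links as first-class data, rather than burying them in attributes, is what lets other systems ask questions like "which meters does this transformer feed?"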

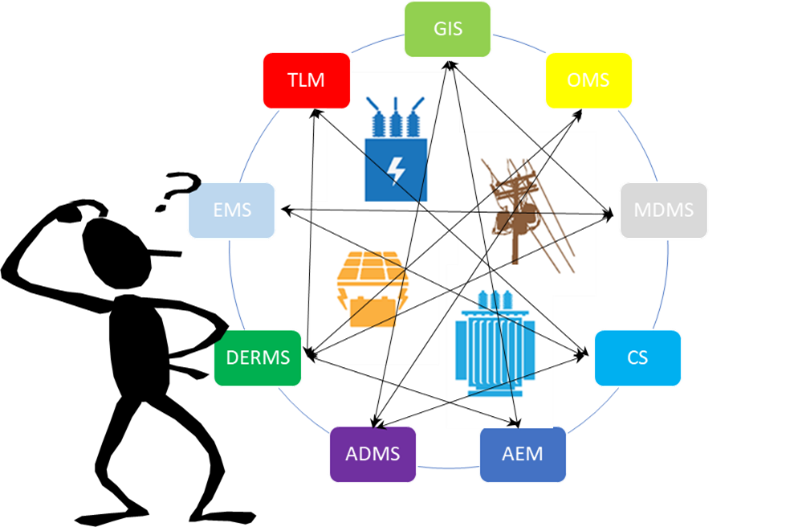

In the last blog article, I discussed several Information Technology (IT) systems that are becoming very important enablers of grid modernization. Advanced Distribution Management Systems (ADMS), Volt-Var Optimization (VVO) systems, and Distributed Energy Resource Management Systems (DERMS) all rely to some extent on elements of the distribution digital twin model. An accurate twin is important not only for building these grid management systems but also for the safe and secure operation of the grid itself. Systems like an ADMS will have a “study mode” environment (a twin of the live model) used to check whether proposed changes or additions to the grid configuration will work as planned. DERMS platforms will have similar environments to test demand response and DER dispatch events before they are initiated. In addition, all these systems will require robust cybersecurity algorithms that constantly monitor thousands of sensors across the grid for live measurements and status, and instantly alert on unexpected conditions, or on voltage or power levels that fall outside expected tolerance ranges at any location. To do this, the configuration of the grid, as well as the physical and electrical locations of all sensors, must be modeled exactly in the twin. This makes an accurate digital twin a truly mission-critical requirement.
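The monitoring described above depends on the twin knowing where every sensor sits. A minimal sketch of that dependency, assuming readings arrive as (sensor ID, voltage on a 120 V base) pairs: any reading from a sensor the twin does not know about is flagged, and any voltage outside an assumed band is flagged with its modeled location. The 114 to 126 V band loosely follows ANSI C84.1 Range A service voltage and is only an assumption here; actual limits are set by each utility. Sensor IDs and locations are hypothetical.

```python
from dataclasses import dataclass

# Assumed service-voltage band on a 120 V base, loosely following ANSI C84.1 Range A.
LOW_V, HIGH_V = 114.0, 126.0


@dataclass
class Reading:
    sensor_id: str
    volts: float  # measured voltage on a 120 V base


def check_readings(readings, sensor_locations):
    """Flag readings from unmodeled sensors or with voltage outside the band."""
    alerts = []
    for r in readings:
        location = sensor_locations.get(r.sensor_id)
        if location is None:
            # The twin has no record of where this sensor sits on the grid.
            alerts.append((r.sensor_id, "sensor not in twin model"))
        elif not LOW_V <= r.volts <= HIGH_V:
            alerts.append((r.sensor_id, f"{r.volts:.1f} V out of range at {location}"))
    return alerts


locations = {"S1": "Feeder 12 / pole 44", "S2": "Feeder 12 / pole 87"}
readings = [Reading("S1", 120.2), Reading("S2", 111.3), Reading("S9", 121.0)]
for alert in check_readings(readings, locations):
    print(alert)
```

Note that sensor S9 reports a perfectly normal voltage yet still raises an alert: without an accurate location in the twin, operators cannot act on its data.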

Another system that has become a very common customer-facing tool for many electric utilities is the Distributed Generation (DG) Hosting Capacity Map. This visual, geographic representation of the distribution system provides customers, regulators, legislators, and DG developers with basic information about where siting DG resources will be least difficult (in other words, least expensive) for the grid to accommodate. At a minimum, the map display requires a reasonably accurate GIS and circuit-level knowledge of the DG installed (and planned) on the system. Most maps today are fairly simple in design, but creating the display can also involve a tremendous amount of information and processing, depending on the accuracy desired and on how well that accuracy can actually be achieved from elements of the digital twin. There are also powerful opportunities emerging to enhance the display to provide much more than just “hosting capacity”. Eventually, these maps will be able to show where DERs can actually provide benefit to the grid. They can also become more interactive and help determine what capacity and mix of DERs (e.g., solar PV and battery storage) will provide the most benefit at any given location.
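At its simplest, a map layer combines a per-section hosting limit with the DG already installed and queued. The sketch below assumes the limit for each feeder section has already been determined by a separate engineering study (real hosting capacity analyses generally rely on power-flow simulation, which is not shown), and then colors each section by remaining capacity. Section names, kW values, and the 1,000 kW "green" threshold are hypothetical.

```python
# Hypothetical color threshold for the map layer, in kW.
GREEN_MIN_KW = 1000.0


def remaining_capacity_kw(limit_kw, installed_kw, planned_kw):
    """Capacity left after installed and queued DG, floored at zero."""
    return max(0.0, limit_kw - installed_kw - planned_kw)


def map_layer(sections):
    """sections: name -> (limit_kw, installed_kw, planned_kw). Returns name -> (kW, color)."""
    layer = {}
    for name, (limit_kw, installed_kw, planned_kw) in sections.items():
        rem = remaining_capacity_kw(limit_kw, installed_kw, planned_kw)
        if rem >= GREEN_MIN_KW:
            color = "green"
        elif rem > 0:
            color = "yellow"
        else:
            color = "red"
        layer[name] = (rem, color)
    return layer


sections = {
    "F12-A": (3000.0, 800.0, 500.0),
    "F12-B": (1500.0, 900.0, 400.0),
    "F7-C": (1200.0, 1100.0, 300.0),
}
print(map_layer(sections))
```

Every input here (section boundaries, installed and planned DG, limits) comes from the digital twin, which is why the map is only as good as the underlying data.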

The engineering tools exist today to perform this type of analysis, and programming tools can be leveraged to automate the process. However, the most significant barrier to implementing systems like this (as well as ADMS, VVO, and DERMS) lies in the myriad details of the source databases these systems require. The electric distribution digital twin, as it exists today in most electric utilities, is still far from being a complete and fully integrated system. Utilities recognize more acutely every day the importance of accurate data sources, and of reliable connections between those sources, as they try to meet the growing expectations of customers and regulators. In the process of implementing these new IT systems, the gaps in critical data sources, and especially the lack of automated connections between them, become obvious and cause delays and cost overruns. That is beginning to change, as the need for a better grid digital twin is driven by the same forces pushing modernization of the physical grid.

Some of the key data sources making up the distribution digital twin are as follows:

- Geographic Information Systems (GIS) – Pretty much every electric utility now has some type of GIS, and many began as a scanned and digitized version of older paper maps. The GIS usually includes all or most of the facilities on the distribution system in a very structured database. It can include lots of attribute details (e.g., size, power ratings, voltage ratings, and of course geographic grid coordinates), and it is critical in describing how elements of the grid are connected to each other.

- Enterprise Asset Management (EAM) Systems – Many industries, including electric utilities, use some type of system to manage and track all of their major equipment, including scheduled maintenance, repairs, and upgrades of that equipment over time. These databases often contain important details about grid equipment that are critical to model-based engineering and operations systems.

- Customer Metering and Billing Systems – There are typically multiple IT systems included in this category, containing details about customer energy usage, power demands, meter types, rate plans, and the presence of local generation like rooftop solar (and even larger “utility scale” DERs). Advanced meters being installed across the country are prompting the need for major upgrades in these systems, as the data requirements are significantly larger. A future blog article will focus on modernization at the “grid edge”, including the meter and customer-side resources beyond the meter, and how they will play a large role in the future of the grid.

- Outage Management Systems (OMS) – This system is critical for day-to-day operation of the distribution grid and allows operators to troubleshoot and respond to outage events and other non-outage issues. It typically relies on the GIS for its “normal configuration” model and requires accurate customer-to-grid connection information. A closely related reporting database typically includes records for every “event” on the system, as well as complete outage histories for all customer locations.

- Protection Systems – There are many pieces of equipment (e.g., breakers and reclosers) on the distribution grid that require specifically designed settings for proper coordination with other devices, so that system faults are isolated as quickly as possible, and with minimal disruption to customers.

- Substation Meter Historians (and other grid sensors) – The number of meters and other sensors which continuously monitor grid conditions is rapidly expanding. The databases for storing this stream of data, and the systems used to browse, search, and summarize this data, are becoming extremely important to electric utilities, especially as the distribution grid becomes more dynamic with the integration of intermittent DER. New analytics tools are emerging to take advantage of this massive amount of data and help grid operators and engineers detect and troubleshoot problems, as well as provide valuable information for improved grid design going forward.

- Other Engineering Databases – Over the past few decades, electric utility engineers have used spreadsheets and customized databases to store all kinds of important grid data. (I know this, because I was one of them!) These unique solutions evolved as the simplest and most efficient ways to document and manage information at the local level. However, since few people did things exactly the same way, and many people wanted their own solution, this created a challenge for the company to find that “single source of truth” for any piece of information. Now, many utilities are working to incorporate this data into more formal and robust enterprise databases, like those listed above, so that the correct information can be leveraged automatically by other systems. This is often a slow, painstaking effort, akin to the challenges I faced earlier in my career searching through paper records stored by my predecessors to create those awesome spreadsheets.
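Several of the systems above, especially the GIS and OMS, depend on knowing how grid elements connect. A minimal sketch of a connectivity trace, assuming the network is stored as (node, node, device) edges where `device` is the switch or protective device on that segment (or `None` for a plain conductor): a breadth-first search from the feeder breaker finds everything still energized when a given set of devices is open. All node and device IDs are hypothetical; production GIS and OMS tracing handles phasing, loops, and many more device types.

```python
from collections import deque


def trace(source, edges, open_devices):
    """Return every node reachable from `source` without crossing an open device."""
    adjacency = {}
    for a, b, device in edges:
        adjacency.setdefault(a, []).append((b, device))
        adjacency.setdefault(b, []).append((a, device))
    seen, queue = {source}, deque([source])
    while queue:
        node = queue.popleft()
        for nxt, device in adjacency.get(node, []):
            if device in open_devices or nxt in seen:
                continue  # open device blocks the path, or node already visited
            seen.add(nxt)
            queue.append(nxt)
    return seen


edges = [
    ("BRK-12", "N1", None),
    ("N1", "N2", "SW-1"),           # sectionalizing switch
    ("N2", "XFMR-7", None),
    ("XFMR-7", "CUST-101", None),
    ("N1", "XFMR-3", None),
    ("XFMR-3", "CUST-055", None),
]
energized = trace("BRK-12", edges, open_devices={"SW-1"})
print(sorted(n for n in energized if n.startswith("CUST")))
```

With SW-1 open, only CUST-055 remains connected to the breaker. An OMS performs a far more elaborate version of this kind of trace to decide which customers an outage affects, which is why the customer-to-grid connections in the twin must be accurate.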

Establishing an accurate and fully integrated system of databases like this as a digital twin is a daunting task, especially for a large utility. Many large utilities have tens of thousands of miles of distribution lines, hundreds of substations, several thousand circuits, and hundreds of thousands of transformers supplying millions of customers. Much of this infrastructure has been installed, repaired, and replaced multiple times over the last century, and this work continues every day on pieces of the grid all over the country. It truly is a gigantic machine in a constant state of upgrade and repair.

Over the years, documentation of all the details of this infrastructure has occurred in a wide variety of ways. It is important to remember that most large utility companies were created through mergers and takeovers of smaller companies, each of which was likely formed the same way. Each of these smaller companies had its own record-keeping systems, naming conventions, construction practices, and standard equipment types, and little of that changes quickly, if ever, after a merger. Paper records have been digitized over the last few decades, again in a variety of ways, from spreadsheets to complex (and often proprietary) database systems. Paper maps have also been digitized into GIS databases; however, that data is only as good as what was on the paper to begin with. Getting all of this data into consistent, complete, and accurate databases that can be efficiently integrated has been a long and difficult struggle for most utility companies. Much of the cleanup requires brute-force methods like comprehensive field inspections and manual record updates (mass data entry efforts) requiring many skilled workers.
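Before cleanup crews head to the field, a common first step is to compare systems against each other to find where they disagree. The sketch below assumes the GIS and EAM records can be exported as dictionaries keyed by a shared asset ID (in practice, the lack of a shared ID is often the first problem to solve). It reports assets missing from either system and attribute mismatches for the fields being checked. Asset IDs, field names, and values are hypothetical.

```python
def reconcile(gis, eam, fields):
    """Compare two asset exports keyed by a shared asset ID and list discrepancies."""
    issues = []
    for asset_id in sorted(gis.keys() | eam.keys()):
        if asset_id not in eam:
            issues.append((asset_id, "missing from EAM"))
        elif asset_id not in gis:
            issues.append((asset_id, "missing from GIS"))
        else:
            for f in fields:
                g, e = gis[asset_id].get(f), eam[asset_id].get(f)
                if g != e:
                    issues.append((asset_id, f"{f}: GIS={g!r} EAM={e!r}"))
    return issues


gis = {
    "T-100": {"kva": 50, "phase": "A"},
    "T-101": {"kva": 25, "phase": "B"},
    "T-102": {"kva": 75, "phase": "C"},
}
eam = {
    "T-100": {"kva": 50, "phase": "A"},
    "T-101": {"kva": 37.5, "phase": "B"},
    "T-200": {"kva": 50, "phase": "A"},
}
for issue in reconcile(gis, eam, fields=["kva", "phase"]):
    print(issue)
```

A check like this cannot say which system is right; it only narrows down where the field inspections and manual record updates should focus.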

Beyond this effort, maintaining data integrity is also a big challenge. Effective “data governance” within any large utility requires a clear understanding of who is responsible for the accuracy and completeness of each and every data element documented in the course of day-to-day business. This may not sound difficult on the surface, but many of the large information systems at the core of the distribution digital twin interact with many people across multiple departments and work processes. Moreover, proper and accurate documentation of asset installation details, while always important, can suffer when it is given lower priority than project budget and schedule.

The electric utility industry is beginning to explore potential data management solutions for an accurate digital twin. One example is work led by the Electric Power Research Institute (EPRI), an independent, non-profit industry organization. EPRI has been collaborating with a few dozen utilities and software vendors on a Grid Model Data Management (GMDM) initiative, an effort to streamline the production and maintenance of accurate, accessible grid models. This research, still in the early stages of adoption across the industry, includes the use of an open standard format for model data called the Common Information Model (CIM), along with an enterprise-wide model data management “information architecture”, based on industry best practices, to reduce the labor associated with accumulating, validating, synchronizing, and correcting model data for every IT system. A major benefit of this effort would be a single “common” data format that all model-based simulation and analytics tools could be designed to support. Software vendors could design products for this one standard, rather than having customized solutions developed for each product at each utility company. Achieving this vision is not trivial, but compared to the accumulated efforts of all companies across the industry to prepare model data, implement unique product data integrations, and then maintain those unique integrations over time, an industry standard format and practice would seem to be a no-brainer.
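The arithmetic behind that "no-brainer" can be sketched with a simplified counting model. It assumes that without a standard, every product needs its own custom integration at every utility, while with a common format each product supports the standard once and each utility produces the standard format once. The function names and the product and utility counts are illustrative only, not industry figures.

```python
def point_to_point_integrations(products: int, utilities: int) -> int:
    """Each product needs a custom integration at each utility."""
    return products * utilities


def common_format_integrations(products: int, utilities: int) -> int:
    """Each product supports the standard once; each utility exports to it once."""
    return products + utilities


# Illustrative counts only, not industry figures.
for products, utilities in [(5, 10), (20, 50)]:
    print(products, utilities,
          point_to_point_integrations(products, utilities),
          common_format_integrations(products, utilities))
```

Under these assumptions, 20 products at 50 utilities means 1,000 custom integrations versus 70 with a common format, and the gap widens as either number grows, before even counting the ongoing cost of maintaining each integration.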

As more important applications using the distribution digital twin evolve and become critical for day-to-day operations, utilities are realizing the need for more robust processes for records documentation, and for new oversight and inspection processes to ferret out bad or missing data. The need for improved clarity and formalization around data governance is also becoming obvious to utility executives as their companies embark on large, state regulator-driven IT projects supporting highly visible and politically sensitive grid modernization initiatives. Digital twin technology, whether it be a small-scale, home-grown custom integration or a large, enterprise-wide comprehensive single solution (offered by a growing list of vendors), will be a key enabler for all of these initiatives.